TensorFlow and High Level APIs

I got a chance to watch this great presentation on the upcoming release of TensorFlow v2 by Martin Wicke. He goes over the big changes (and there are a lot) plus how you can upgrade code written for earlier versions of TensorFlow to the new one. Let's hope the new version is faster than before! My video notes are below:

TensorFlow

Since its release, TensorFlow (TF) has grown into a vibrant community

The team learned a lot about how people use TF

Realized using TF can be painful

You can do everything in TF, but it isn't always clear which way is best

TF 2.0 alpha has just been released

Install it with 'pip install -U --pre tensorflow'

Adopted tf.keras as high-level API (SWEET!)

Includes eager execution by default

TF 2 is a major release that removes duplicate functionality, makes the APIs consistent, and improves compatibility across TF versions

New flexibility: a full low-level API, internal operations are accessible now (tf.raw_ops), and inheritable interfaces for variables, checkpoints, and layers
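To make the eager-by-default and tf.raw_ops notes a bit more concrete, here's a minimal sketch, assuming a TF 2.x install. The tensor values are made up by me for illustration and are not from the talk.

```python
import tensorflow as tf

# In TF 2.x, ops run immediately; no Session or graph building is needed.
a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
product = tf.matmul(a, a)

# Eager tensors convert straight to NumPy arrays for inspection.
product_np = product.numpy()
print(product_np)

# tf.raw_ops exposes the underlying ops; arguments must be passed by keyword.
doubled = tf.raw_ops.AddV2(x=a, y=a)
print(doubled.numpy())
```

In everyday code you'd stick with the regular API (tf.add, tf.matmul); the raw ops are there for when you need the lower level.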

How do I upgrade to TensorFlow 2

Google is starting the process of converting its own codebase, one of the largest anywhere, to TF 2

Will provide migration guides and best practices

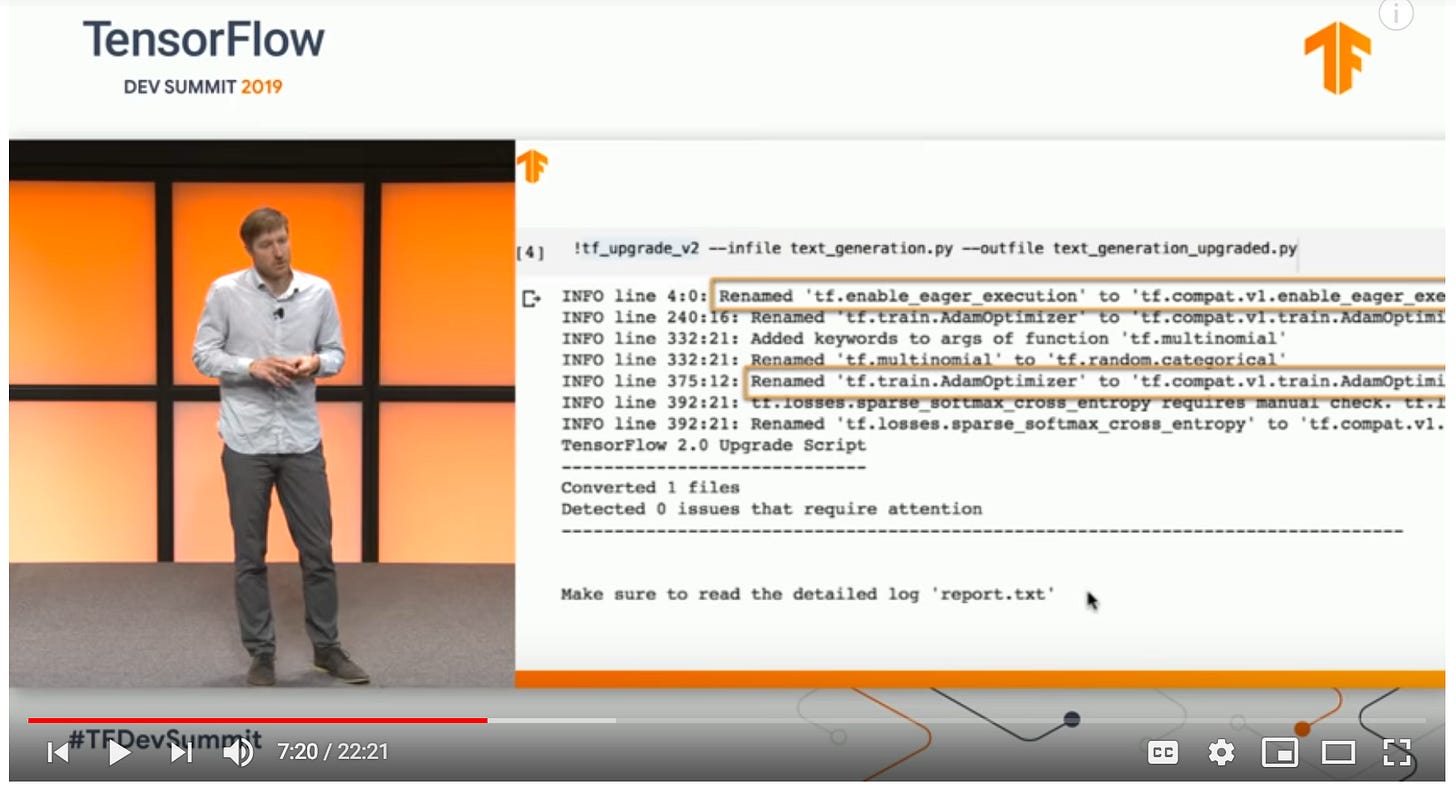

Two migration aids will be shipped: a backward-compatibility module (tf.compat.v1) and a conversion script (tf_upgrade_v2)

The API reorganization causes a lot of function name changes
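As an illustration of the renames, here's a small sketch assuming TF 2.x. tf.log is one function I know moved (it now lives at tf.math.log), and the old name stays reachable through the tf.compat.v1 module. The input values are just examples of mine.

```python
import tensorflow as tf

x = tf.constant([1.0, 10.0, 100.0])

# The TF 1.x spelling was tf.log(x); in TF 2.x that top-level name is gone.
print(hasattr(tf, "log"))

# New home of the function after the API reorganization.
new_style = tf.math.log(x)

# The compatibility module keeps the 1.x name working during migration.
old_style = tf.compat.v1.log(x)

print(new_style.numpy(), old_style.numpy())
```

The compat module is handy as a stopgap, but the new names are the ones to aim for in the long run.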

TensorFlow v2

Release candidate targeted for 'Spring 2019' (the timeline might be a bit flexible)

Development is all tracked on GitHub and the project tracker

Needs user testing, please go download it

Karmel Allison is an Engineering manager for TF and will show off high-level APIs

TF adopted Keras

Keras was implemented and optimized inside TF as tf.keras

Keras was built from the ground up to be Pythonic and simple

tf.keras was built for small models, whereas inside Google they need to build HUGE models

So Google focused on production-ready Estimators

How do you bridge the gap between a simple API and a scalable one?

Debug with eager execution, where it's easy to inspect values as NumPy arrays
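Here's roughly what debugging with eager looks like, as a sketch assuming TF 2.x. The layer sizes and the random input are arbitrary choices of mine, just for illustration.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy batch: 4 examples with 3 features each.
batch = tf.constant(np.random.rand(4, 3).astype("float32"))

hidden_layer = tf.keras.layers.Dense(8, activation="relu")
output_layer = tf.keras.layers.Dense(1)

# Layers are plain callables in eager mode, so each step returns real values.
hidden = hidden_layer(batch)
prediction = output_layer(hidden)

# Drop a print (or a debugger breakpoint) anywhere to look at the NumPy data.
print(hidden.numpy().shape)
print(prediction.numpy())
```

Since nothing is deferred to a session run, a shape mismatch or a NaN shows up on the line that caused it.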

TF also consolidated many APIs into Keras

There's one set of Optimizers now, fully scalable

One set of Metrics and Losses now

One set of Layers

RNN layers got cleaned up in TF too: there is now one version of the GRU and LSTM layers, and it selects the right CPU/GPU implementation at runtime

Data parsing is easier to configure now (WOW, I have to check this out)

TensorBoard is now integrated into Keras
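To see the consolidated pieces working together, here's a minimal sketch assuming TF 2.x: one optimizer, one loss, one metric, the unified LSTM layer, and the TensorBoard callback. The toy data, layer sizes, and temporary log directory are my own placeholders, not from the talk.

```python
import tempfile

import numpy as np
import tensorflow as tf

# Hypothetical toy data: 16 sequences, 5 time steps, 3 features each.
features = np.random.rand(16, 5, 3).astype("float32")
targets = np.random.rand(16, 1).astype("float32")

model = tf.keras.Sequential([
    tf.keras.layers.LSTM(8),  # the single LSTM layer for both CPU and GPU
    tf.keras.layers.Dense(1),
])

# Optimizer, loss, and metric all come from the one tf.keras namespace.
model.compile(
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.01),
    loss=tf.keras.losses.MeanSquaredError(),
    metrics=[tf.keras.metrics.MeanAbsoluteError()],
)

# TensorBoard logging is just another Keras callback.
log_dir = tempfile.mkdtemp()
tensorboard = tf.keras.callbacks.TensorBoard(log_dir=log_dir)

history = model.fit(features, targets, epochs=1, batch_size=8,
                    callbacks=[tensorboard], verbose=0)
print(history.history["loss"])
```

Pointing 'tensorboard --logdir' at the log directory afterwards should show the training curves.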

TF's distribution strategies (tf.distribute) handle distributing work with Keras

Can add or change distribution strategy with a few lines of code
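The "few lines of code" part looks something like this, as a sketch assuming TF 2.x. I picked MirroredStrategy as the example strategy; the model and data are toy placeholders of mine.

```python
import numpy as np
import tensorflow as tf

# MirroredStrategy trains synchronously across the GPUs on one machine;
# with no GPU available it runs as a single replica on the CPU.
strategy = tf.distribute.MirroredStrategy()
print("replicas:", strategy.num_replicas_in_sync)

# The only change to ordinary Keras code: build and compile inside the scope.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.layers.Dense(8, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse")

# Hypothetical toy data: 32 examples with 4 features each.
features = np.random.rand(32, 4).astype("float32")
targets = np.random.rand(32, 1).astype("float32")
history = model.fit(features, targets, epochs=1, batch_size=8, verbose=0)
```

Swapping in a different strategy should only mean changing the line that creates it; the model code inside the scope stays the same.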

TF Models can be exported to SavedModel using the Keras function (and reloaded too)

Coming soon: multi-node sync

Coming soon: TPUs

There's a lot in this 22-minute video about TensorFlow v2. Must watch.