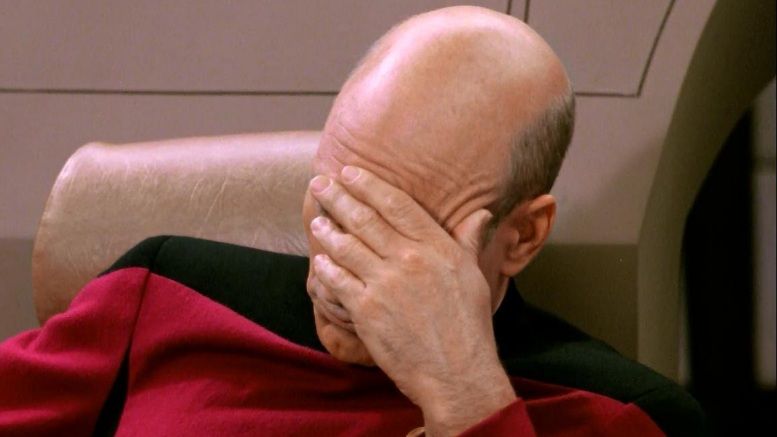

Whoops! This Is Not the Page You Were Looking For

A more human 404 page with options you can choose from to find what you need.

I'm sorry you landed on this page. It looks like the article, image, or asset you're trying to access is no longer available, or I retired it. I made a lot of changes recently, so the link could also just be broken.

No worries, friend! All is not lost! Try these steps to find what you're looking for:

- Try the search box at the top of the page

- Try our Tutorials or Money Making Online tags

- If all else fails, drop me a comment

Best wishes, friend!